Brainstorming GenAI Use Cases, Document Automation, Prompt Engineering Tips and More! Happy Friday!...

Lawyers Will Play a Crucial Role in Development and Adoption of AI Powered Applications

Will the adoption of AI powered tools be any different than the adoption by legal teams of any other type of legal technology?

Document automation has been available for decades and it is still not widely used even though the technology is very advanced. Changing legal matter workflows can be difficult and even tools that are easy to use, save time and improve quality of work product are not always adopted. Many solutions run up against change management problems that are somewhat unique to the legal industry, including law firm management structures (see my previous post that highlights John Alber's brilliant archipelago analogy), high pressure on lawyers to produce perfect work product under crazy time constraints and the billable hour model of pricing and compensation.

This time feels different with the recent hype around Generative AI because lawyers and law firms appear to be genuinely interested in learning about the new tools that are becoming available in order to keep pace with their competitors. The rubber will meet the road over the next few years (which seems like a long time given what has happened in the last year) when we see whether legal teams advance from broader generative AI use cases like summarization and legal research and attempt to implement production ready solutions for specific pain points that significantly change the way legal teams complete work.

In order for this to happen, lawyers and other subject matter experts will need to engage with the technology and play a key role in developing and testing the solutions. They will need to dig in and understand what large language models and generative AI tools excel at and what they are not good at. Armed with this skill, the most creative lawyers will be the ones that work with programmers and vendors to create the magic solutions that will change the way law is practiced in the future.

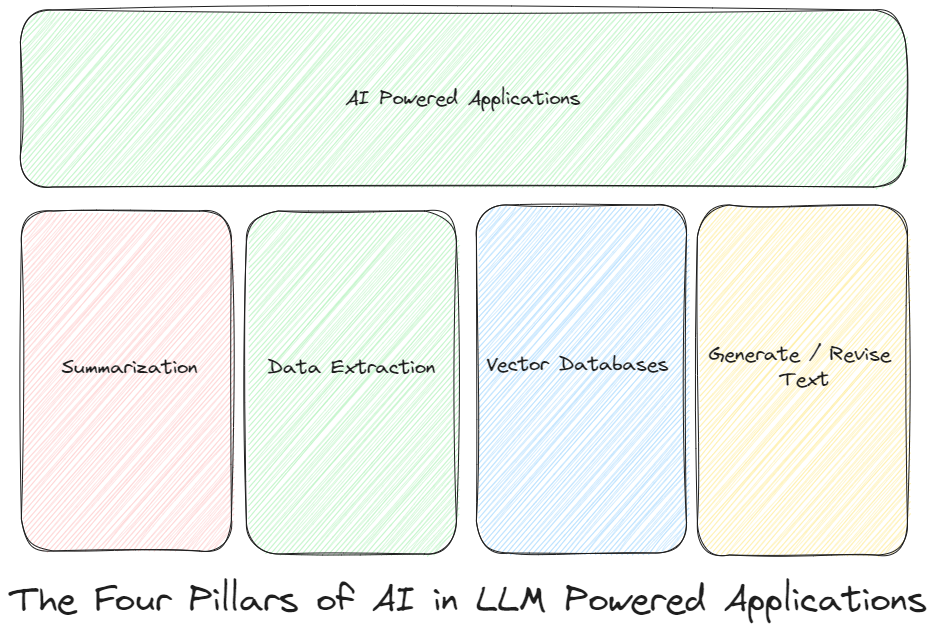

In this post we will review (i) what large language models (LLMs) are good at, (ii) the practical limitations of LLMs, (iii) the connectors (people and tech) necessary to build apps and (iv) why lawyers are the key to it all. My hope that this is a good primer on the subject for lawyers that are curious and want to think about building, using and testing solutions that can change the future of their practice.

What are Large Language Models (LLMs) Good At?

There are a number of large language models that are now available to developers to build applications. No two models will behave exactly the same when asked the same questions and costs vary widely. Some are better and more cost effective for tasks such as creating embeddings (ADA) and others excel at summarizing text or extracting data or even writing code (GPT 3, GPT 4 or DaVinci). In this post we will not focus on any particular model or prompt engineering but more broadly cover some of the things LLMs do well so the reader can think creatively about use cases they encounter in their own practice. Although there are many effective ways to deploy LLMs, we will focus on summarization, semantic search / embeddings, revising text and extracting data, as those are most relevant to legal tech and most transactional practices.

Summarization

LLMs such as GPT3 and GPT4 are very good at reading and writing. This is something important to keep in mind. They probably read and write better than most lawyers (from a technical standpoint). If you ask them to follow a set of instructions that are clear and require reading and writing text, they generally will do a great job.

For this reason, they work very well out of the box at summarizing text. You can send a legal document (subject to size limitations we will discuss later) into the LLM and ask for a summary and you will generally get a pretty cohesive and accurate summary. This will likely be useful in transactional practices for summarizing agreements such as leases, contracts and other documents. Machine learning has been available for this task in the past, but I think the huge difference with the new availability of LLMs is you don't need to do as much specific training and programming to get pretty useable results. It will only get better.

Hallucinations that were common in the context of research type assignments (as were made famous by the lawyer that cited a fake case provided by Chat GPT in a brief) are less of a problem with summarizing text as you are passing the text and grounding the LLM in those facts with your prompts.

Revising or Generating Text

LLMs are also pretty good at revising text. Results are not always consistent, but it can be a good tool to use when drafting. For example, you could pass it a clause and ask it to make it more friendly to the Buyer as opposed to the Seller.

LLMs are also particularly helpful if you have a blank page and need a starting point. If you have a form or precedent language that will still always be preferable, but LLMs have been trained on a bunch of public documents, so you can take a shot at asking it to write something to get you started. It is very important to check any generated text as you could get into hallucination type problems.

Based on my experience so far, these use cases are not quite production ready, but it will get better as LLMs are trained on more and more legal data and they are fine-tuned with client / firm specific information.

Extracting Information

LLMs are really good at extracting information from free form text (even if OCR'd from a PDF). This extracted text can then be loaded into a more structured database table or passed to an application to perform tasks with that information (like document automation). This is probably the most production ready use of LLMs right now. This also solves one of the biggest problems that is holding back many legal applications - the lack of quality structured data.

In the context of a loan transaction, you could use prompts to extract information like the maturity date or the loan amount from a set of loan documents and then feed it into a structured summary using legacy technology like document automation tools.

You could also pass the LLM a document and ask it to classify it. For example, is the loan document a promissory note or is it a deed of trust. This should become standard in most DMS systems where right now most documents are classified as "Document" or "Assignment" (because it is the first choice). With accurate classification of documents, you can then do next level prompting since you know what type of document it is. Recently Zuva / Litera released a product that appears to be very helpful with this task.

The best part of this use is that the data extracted can then be verified before it is used (either by a human or by an algorithm). This should result in high quality applications.

Semantic Search / Embeddings / Vector Databases

Last but not least, the availability of LLMs has enabled more access to vector databases and embeddings. While this is not Generative AI, this is probably one of the most important building blocks for LLM powered apps because it allows you to quickly search for relevant information to feed into the tasks described above.

You can dive into a more technical overview if you have time, but at a high level, embeddings allow you to pass a paragraph of text to an LLM (ADA is probably the best bang for the buck but there are others) and get back a vector (think detailed number description of the words). You can then store that vector in a vector database (special types of databases for vectors). You can apply this technique to a large amount of text or documents. Then when you want to search for something, you just turn the search phrase into a vector and then you can get back the closest matches.

For example, if you want to extract the lease expiration date from a bunch of lease documents, you don't need to pass the entire document in to the LLM to extract the date, you can first search for the relevant clauses that contain the expiration date concept using semantic search and then extract the information from only the relevant clauses.

Semantic search is also really helpful for building clause search databases or other more general knowledge management solutions that would have been more difficult without this tool.

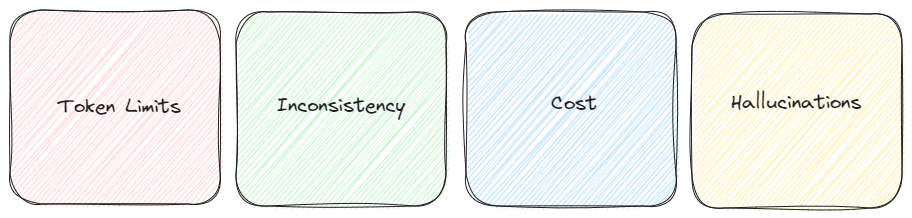

Practical Limitations of LLMs

There are several key limitations to building production ready applications powered by LLMs. These need to be mitigated by a combination of technology, programming and creativity until LLM technology improves (which may happen soon in some cases).

- Token Limits - Models have limits to the amount of text that can be passed in and this includes the instructions. You may have something working for a smaller (10 - 20 page document) but if you need to be able to review a 100 page document then you need a strategy to break it up and put it all back together without losing context.

- Cost - Newer models like GPT4 and Davinci that perform better and have larger token limits are more expensive by a decent amount. This may be worth it for complicated high value tasks, but developers need to be mindful of how much running prompts will cost in the context of the price they are able to charge for the solution (and the time being saved). It is important to not pass irrelevant text or use a more expensive engine where it isn't needed.

- Hallucinations - If you ask open ended questions and do not ground the prompts with facts or trusted context, you can get "hallucinations" where the LLM makes something up.

- Inconsistency - If you do not give very specific instructions and give examples of the expected output, you can get very inconsistent results. This is not ideal for tasks that require precision (like drafting off a form or precedent).

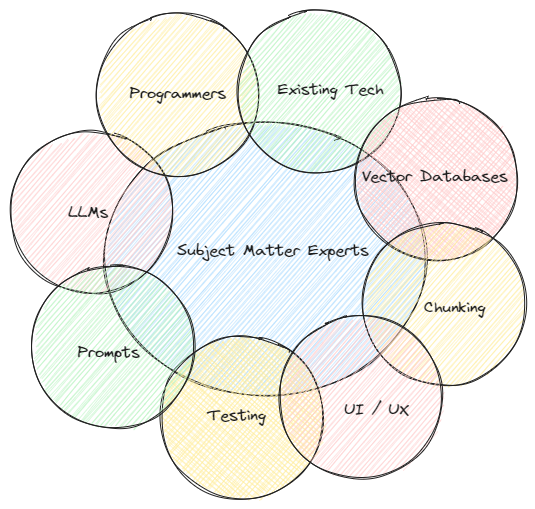

Connectors Required to Make AI Toolkit Effective in Real World Applications

The good news is that in many cases, the practical limitations of LLMs can be reduced by applying "connectors" to bridge the gaps.

Existing Technology Stack

The first key connector is the existing technology stack - databases, web applications and other specialized tools like document automation platforms. Basically everything that already exists and works well and is also what users are used to interacting with on a day to day basis. That doesn't need to change.

LLMs are not going to do the entire task for most production applications for specific use cases. They will need to be interacted with to do tasks or parts of the tasks that the current technology stack cannot do. They may also eliminate the need to write code or make data structures, but there are a lot of things that current tools like databases, web applications and document automation tools still do better than LLMs, so those tools should still be used to do what they are good at.

It is important to remember that there is no need to incur cost using an LLM unless it can do something existing technology cannot do already or cannot do without a lot of programming effort.

Programmers Leveraging Legacy Programming Techniques

The second connector is old school programming techniques using languages like C sharp or Java. This will be necessary to combine the existing technology stack with the LLM prompts and their responses. These strategies include:

- Chunking Documents - Programmers can break apart documents into smaller "chunks" of text that meet token limits and then put it all back together. This is an art and is key to the effectiveness most applications built using LLMs.

- Vector Databases - Context can be stored in vector databases and then retrieved to be used in prompts.

- Dynamically Building Prompts - By including context dynamically in prompts you can greatly improve the accuracy of information returned by the LLM.

- String Together Tasks in Specific Order - Programmers can carefully build the sequence of events that may include a combination of database calls, prompts, manipulation of text and other techniques.

- User Experience - The whole experience should seem like magic to the user. Part of the beauty of ChatGPT is the very simple chat interface. To solve specific problems, there will need to be programming behind the scenes that makes the magic.

Subject Matter Experts

The third and final connector is probably the most important - the subject matter experts. They will play a key role in several aspects of developing solutions:

- Creativity - They know what their pain point is and they know how they do the tasks without technology. They will also likely have ideas on how the programmers should break the tasks up into steps and what is OK and what is not based on real world examples.

- Identifying LLM Limitations - For example, in the simple example of summarizing a document like a lease, a lawyer that has reviewed a number of leases will immediately ask if the LLM can combine the summary to take into account changes to the underlying document in amendments. This is a simple example, but the devil is definitely in the details. It is one thing to show a summary of text, but to make it useable and replace or aid a lawyer to create final work product all of these details need to be considered. Some of them are hard and are not easy to identify without the subject matter expert.

- Write and Test Prompts - Prompt engineering is going to be one of the most important skills in the coming years. It is not a programming language and the best prompts are the ones that are the most specific. Lawyers are great at paying attention to details and the best prompt engineers will be subject matter experts.

- Provide Real Examples - One of the key limitations of LLMs is they are not always consistent. This can be mitigated by providing context in the form of examples. The more the better and lawyers and other knowledge management professionals will have the most access to good quality examples that can be used to make the magic work correctly. Without this most applications will probably fall short.

- Verify Results - Another important role for the subject matter expert is to verify the results of the application. This is no different than any other legacy technology, but since LLMs are a bit of a black box and not always consistent (or controllable by programmers), the subject matter expert will need to carefully review all output and provide feedback when things don't go right so it can be fixed.

All of the connectors listed above are important to developing quality applications powered by LLMs, but subject matter experts (lawyers and knowledge management folks) are the key to it all. They should be involved in almost every step of creating an application and without involvement from an expert like a lawyer or a knowledge management professional there will be a missing piece in the development of the application that will not happen until it is released. Then it might be too late.

To do this effectively, they will need to dig in and understand, at least at a high level what the technology can do well, what the limitations are and how to put it all together with programming. The great thing about LLMs as opposed to legacy technology is that subject matter experts can build and test some of the pieces (the prompts) without needing to ask a programmer to write code and compile it. This lets the programmer focus on the technically hard parts of the project and allows the subject matter expert focus on the output and non-programming aspects. If done correctly, it should lead to a much tighter development cycle.

Hopefully this post inspires some lawyers to dig in and learn the technical side of how to build with LLMs and come up with some great ideas!

.jpg?width=50&name=Basile_Matthew_J-(1).jpg)